cross-posted from: https://lemmy.world/post/10921294

Quote from the article:

“People are aware of selfie cameras on laptops and tablets and sometimes use physical blockers to cover them,” says Liu. “But for the ambient light sensor, people don’t even know that an app is using that data at all. And this sensor is always on.” Liu notes that there are still no blanket restrictions for Android apps.

Remark added by me:

Here, it might interest readers to know that, unlike stock Android, GrapheneOS (an Android-based, open source, privacy- and security-focused mobile operating system for selected Google Pixel smartphones) provides a per-app sensors permission toggle. According to their website:

Sensors permission toggle: disallow access to all other sensors not covered by existing Android permissions (Camera, Microphone, Body Sensors, Activity Recognition) including an accelerometer, gyroscope, compass, barometer, thermometer and any other sensors present on a given device. When access is disabled, apps receive zeroed data when they check for sensor values and don’t receive events. GrapheneOS creates an easy to disable notification when apps try to access sensors blocked by the permission being denied. This makes the feature more usable since users can tell if the app is trying to access this functionality.

To avoid breaking compatibility with Android apps, the added permission is enabled by default. When an app attempts to access sensors and receives zeroed data due to being denied, GrapheneOS creates a notification which can be easily disabled. The Sensors permission can be set to be disabled by default for user installed apps in Settings ➔ Privacy.
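To make the described behavior concrete, here is a toy model of it. This is a sketch under my own assumptions, not GrapheneOS code (the real feature lives in the Android framework): `AppSensorGate` and its fields are hypothetical names. It shows the three properties quoted above: the permission is granted by default, a denied app gets zeroed readings of the same shape rather than an error, no events are delivered to it, and a notification is posted that the user can turn off.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence, Tuple


@dataclass
class AppSensorGate:
    """Hypothetical per-app sensors permission (toy model, not GrapheneOS code)."""

    app: str
    granted: bool = True                # granted by default to avoid breaking apps
    notify_when_blocked: bool = True    # the user can disable the notification
    notifications: List[str] = field(default_factory=list)
    listeners: List[Callable[[Tuple[float, ...]], None]] = field(default_factory=list)

    def _blocked(self) -> None:
        # Post at most one notification per app, and only if the user wants it.
        if self.notify_when_blocked and not self.notifications:
            self.notifications.append(f"{self.app} tried to access sensors")

    def read(self, real: Sequence[float]) -> Tuple[float, ...]:
        """Return real values if granted, otherwise zeroed data of the same shape."""
        if self.granted:
            return tuple(real)
        self._blocked()
        return tuple(0.0 for _ in real)

    def deliver(self, real: Sequence[float]) -> int:
        """Forward a sensor event to listeners; returns how many received it."""
        if not self.granted:
            self._blocked()
            return 0  # denied apps receive no events at all
        for callback in self.listeners:
            callback(tuple(real))
        return len(self.listeners)
```

For example, `AppSensorGate("com.example.app", granted=False).read([0.1, 9.8, 0.3])` yields `(0.0, 0.0, 0.0)`. Returning zeros instead of raising an error is what keeps apps that never expected sensor access to be refused from crashing.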

In conclusion, allow me to emphasize another quote from the article:

“The acquisition time in minutes is too cumbersome to launch simple and general privacy attacks on a mass scale,” says Lukasz Olejnik, an independent security researcher and consultant who has previously highlighted the security risks posed by ambient light sensors. “However, I would not rule out the significance of targeted collections for tailored operations against chosen targets.” Liu agrees that the approach is too complicated for widespread attacks. And one saving grace is that it is unlikely to ever work on a smartphone, as the displays are simply too small. But Liu says their results demonstrate how seemingly harmless combinations of components in mobile devices can lead to surprising security risks.

This seems like an entirely academic, theoretical technique with literally zero real-world risk, and without any path forward to ever turn it into a practical attack.

I think the last paragraph OP posted really highlights the niche risk it poses. Nobody is going to use it against you, but a state actor could use it against a specific target, such as a politician or a member of the military, to develop a more accurate assessment of information they have already been collecting.

The GrapheneOS part of things feels a little baity. I switched from Brave to LibreWolf a year ago over similar privacy concerns, but ultimately all my biggest risks come from breaches that happened before I was even using Brave. Same thing with all the phones I’ve had over the years.

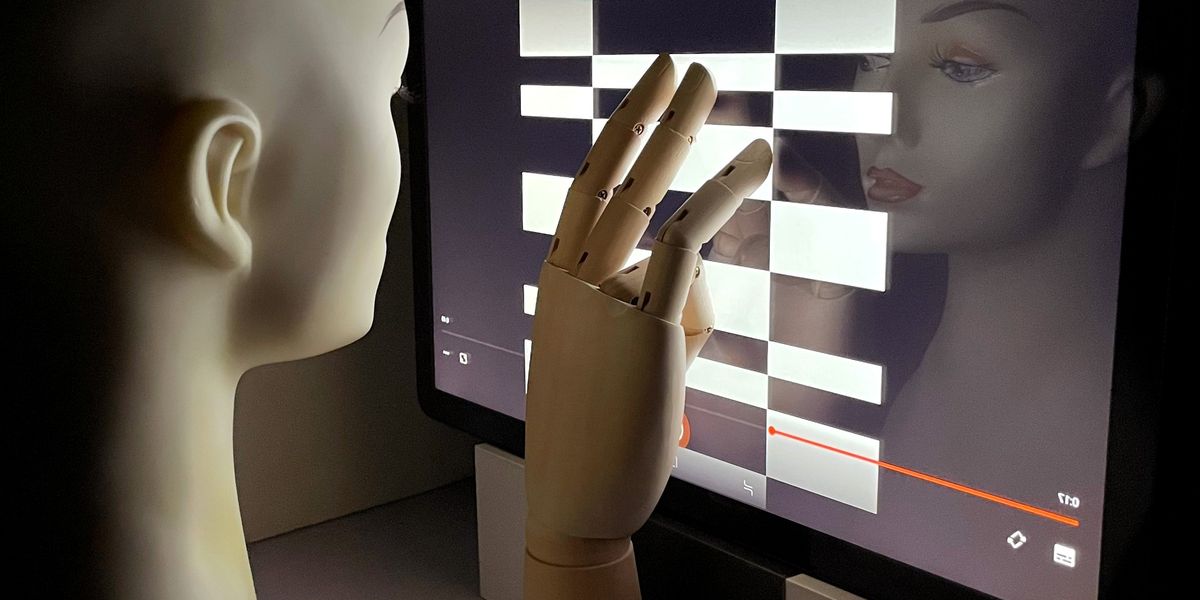

I read the whole article and I think even that is a ludicrous stretch. In order to get a vague image of your hand, it requires either several minutes of projecting a precise, bright, black-and-white checkered pattern on the screen, or over an hour of subtly embedding varied brightness in a video. The checkered pattern represents a best-case/worst-case scenario for this kind of attack, and even that is completely impractical for anything in the real world. This is literally zero risk for everybody. Forever.
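Why does it take so long? The ambient light sensor reports only one number (total light) per reading, so the general idea behind this kind of attack resembles single-pixel imaging: show many known patterns and solve for the scene. The sketch below is a generic, noise-free illustration of that principle using Hadamard patterns. It is my assumption for illustration, not the researchers' actual method, and the function names are hypothetical. The key point is that an N-pixel image needs about N separate readings.

```python
from typing import List


def sylvester_hadamard(n: int) -> List[List[int]]:
    """Build an n x n Hadamard matrix of +1/-1 entries (n must be a power of two)."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of two")
    h = [[1]]
    while len(h) < n:
        h = [row + row for row in h] + [row + [-v for v in row] for row in h]
    return h


def capture(scene: List[float], h: List[List[int]]) -> List[float]:
    """Simulate one ambient-light reading per displayed pattern.

    A screen cannot emit negative light, so each +1/-1 row is shown as a
    bright(1)/dark(0) pattern; the sensor only sees the summed reflection.
    """
    return [sum(((v + 1) // 2) * s for v, s in zip(row, scene)) for row in h]


def reconstruct(readings: List[float], h: List[List[int]]) -> List[float]:
    """Invert the idealized measurements back into per-pixel values."""
    n = len(h)
    total = readings[0]                          # row 0 is all ones: whole-scene sum
    signed = [2 * y - total for y in readings]   # equals H @ scene
    # Hadamard rows are orthogonal (H^T H = n I), so scene = H^T @ signed / n.
    return [sum(h[i][j] * signed[i] for i in range(n)) / n for j in range(n)]
```

With a 4x4 "hand" (16 pixels) you need all 16 patterns before anything can be recovered; a 64x64 image would need 4096. A real sensor is also slow and noisy, so each pattern has to be held or repeated, which fits the minutes-long (or hour-long) acquisition times described above.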


Indeed, it does.

I am not affiliated with GrapheneOS in any way. I mentioned GrapheneOS because it is the only Android-based, open source, privacy- and security-focused mobile operating system I’m aware of that lets you disable sensors per app, and it also happens to be recommended by PrivacyGuides as the best choice for privacy and security. If you know of better alternatives, please explain your reasoning and I will gladly list them too.